A2A and the Real Problems of Enterprise Agentic Systems

Lessons from building and operating A2A in production systems.

Enterprises do not ask whether a protocol can power a demo. They ask whether it can power a platform.

When we first looked at A2A, we were asking whether it could support real systems, not just simple interactions.

Questions like:

Can different teams build agents in different stacks and still interoperate?

Can you understand and replay what agents did and why?

Can agents access internal systems in a secure, policy-safe way?

Can you route, secure, and observe traffic without introspecting payloads?

Can you stream partial results without breaking timelines?

Can you evolve implementations without breaking existing agents?

Can you support multi-agent orchestration patterns?

These are the questions that show up when agentic AI moves beyond demos and into production.

Some teams hit them because they genuinely need interoperability. Others hit them because leadership wants a shared AI standard.

In both cases, A2A is the most serious contender.

MCP solved integration. A2A is solving systems

MCP fixed a real enterprise problem.

Internal services were useful but hard to expose to AI. You needed technical context, tribal knowledge, and manual glue. Only a few engineers could work with them.

MCP changed that. It made internal services easy to wrap, easy to consume, and easy to extend.

Clear inputs. Clear outputs. One request. One response.

Perfect for tools.

Agents do not work like that.

Agents plan. They reason. They maintain state. They call each other. Their interactions are multi-turn, long-running, and open-ended.

MCP solved a narrow, well-defined problem.

A2A tries to solve a wide, messy one.

It picks up exactly where MCP ends.

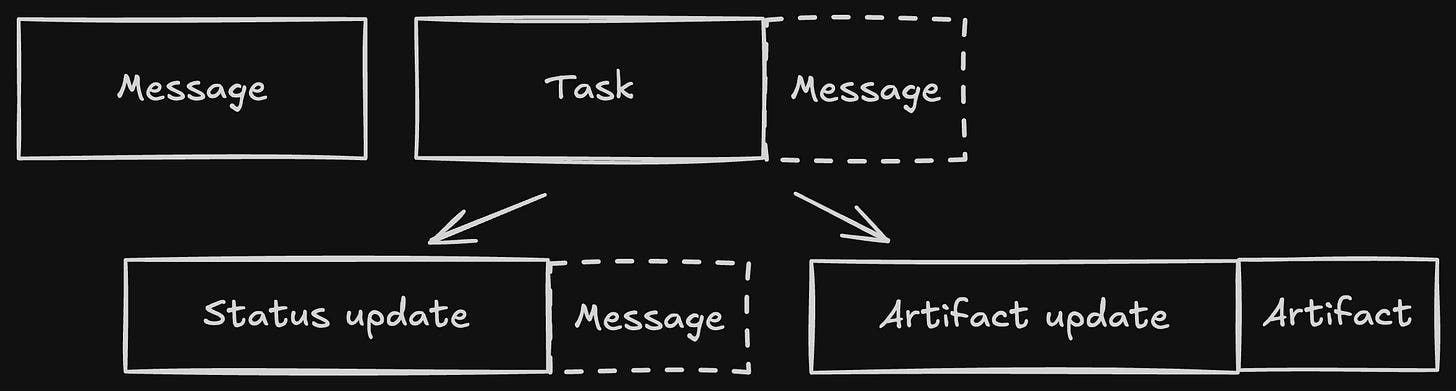

As soon as you move from “use a tool” to “work with another agent”, you need progress updates, lifecycle management, partial results, history, artifacts, and a shared understanding of how a task unfolds.

This is the gap A2A tries to fill.
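Here is a minimal Python sketch of that difference. It does not use the MCP or A2A SDKs. `word_count_tool`, `summarize_agent`, and the two event classes are hypothetical stand-ins, and the state strings only loosely mirror A2A's task vocabulary. A tool returns one value. An agent task emits a sequence of events that the caller has to follow over time.

```python
from dataclasses import dataclass
from typing import Iterator, Union


def word_count_tool(text: str) -> int:
    # Tool-style interaction: clear input, clear output, one request, one response.
    return len(text.split())


@dataclass
class StatusEvent:
    state: str  # simplified stand-in for a task state, e.g. "working" or "completed"


@dataclass
class ArtifactEvent:
    name: str
    chunk: str  # a partial result the client can use before the task ends


Event = Union[StatusEvent, ArtifactEvent]


def summarize_agent(documents: list[str]) -> Iterator[Event]:
    # Agent-style interaction (hypothetical): the caller observes a lifecycle,
    # progress, and partial results, not a single return value.
    yield StatusEvent("working")
    for i, doc in enumerate(documents):
        yield ArtifactEvent("summary", f"doc {i}: {word_count_tool(doc)} words\n")
    yield StatusEvent("completed")


if __name__ == "__main__":
    print(word_count_tool("one request one response"))
    for event in summarize_agent(["a b c", "d e"]):
        print(event)
```

Even this toy agent forces the client to decide what to do with intermediate events. A real protocol has to standardize those decisions.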

What breaks when real systems adopt A2A

When we integrated A2A into Agent Stack, the problems did not appear at the beginning.

They surfaced as we built more agents and as other teams started writing their own.

Real systems surface problems that demos hide.

Here are the ones that mattered most.

1) Ambiguous semantics break interoperability

A2A treats Message and Artifact as separate concepts, but the boundary between them is unclear.

The same output can be modeled in multiple ways. Teams pick different patterns.

When semantics are unclear, implementations drift. SDKs try to support every variation, and interoperability suffers.

Streaming exposes this even more.

Because Messages cannot stream properly, developers are forced to use Artifact updates even when the content is not an artifact at all.
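The following sketch shows what this pushes onto clients. Two hypothetical agents return the same answer in different ways. One sends a final agent Message. The other streams artifact-update chunks because Messages cannot be streamed incrementally. The dictionaries are simplified and only loosely follow A2A's JSON shapes (`kind`, `parts`, `append`, `lastChunk`), so treat the field names as assumptions rather than as the spec.

```python
from typing import Iterable


def text_of(parts: list[dict]) -> str:
    # Concatenate the text parts only; other part kinds are ignored in this sketch.
    return "".join(p["text"] for p in parts if p.get("kind") == "text")


def collect_answer(events: Iterable[dict]) -> str:
    """Normalize an answer that may arrive as a Message or as artifact chunks."""
    answer = ""
    for event in events:
        kind = event.get("kind")
        if kind == "message" and event.get("role") == "agent":
            # Team A's pattern: the answer is one final agent Message.
            answer += text_of(event["parts"])
        elif kind == "artifact-update":
            # Team B's pattern: the answer is streamed as artifact chunks.
            # append=False starts (or replaces) the content; append=True extends it.
            chunk = text_of(event["artifact"]["parts"])
            answer = answer + chunk if event.get("append") else chunk
        # Status updates are ignored here. Every extra modeling variant
        # a team invents means another branch in every client.
    return answer


if __name__ == "__main__":
    team_a = [{"kind": "message", "role": "agent", "parts": [{"kind": "text", "text": "42"}]}]
    team_b = [
        {"kind": "artifact-update", "append": False, "lastChunk": False,
         "artifact": {"parts": [{"kind": "text", "text": "4"}]}},
        {"kind": "artifact-update", "append": True, "lastChunk": True,
         "artifact": {"parts": [{"kind": "text", "text": "2"}]}},
        {"kind": "status-update", "status": {"state": "completed"}},
    ]
    print(collect_answer(team_a), collect_answer(team_b))  # prints: 42 42
```

Both calls produce the same string, but only because the client knows about both patterns. A third team with a third pattern would silently break it.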

These are not theoretical issues.

They show up the moment multiple teams build agents independently.

We documented six related pain points and a path forward here:

👉 https://github.com/a2aproject/A2A/issues/1313

If you are hitting similar problems, a 👍 helps signal it to the steering group.

2) JSON-RPC does not fit enterprise routing

JSON-RPC puts routing metadata into the payload.

Enterprise routing needs it in headers.

Inspecting bodies is expensive, slow, and often impossible.

Duplicating metadata in both places creates drift and risk.

We hit this in 2023 while working with Red Hat on an inference service that routed model requests across data centers.

(We were not using JSON-RPC at the time, but the problem was the same.)

Yes, we questioned JSON-RPC early on.

Some use cases may require non-HTTP transports, but the problem remains:

Enterprise-grade routing cannot rely on metadata inside payloads.
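The difference shows up clearly in a router. Below is a minimal Python sketch, not a production proxy. The `X-Agent-Route` header and the `params.metadata.route` field are hypothetical names chosen for illustration and are not part of A2A. Header-based routing reads a small map that is already parsed. Body-based routing has to buffer and parse the whole JSON-RPC payload. Carrying the value in both places means the router must also detect when the two disagree.

```python
import json
from typing import Mapping, Optional

ROUTE_HEADER = "x-agent-route"  # hypothetical header name, compared case-insensitively


def route_from_headers(headers: Mapping[str, str]) -> Optional[str]:
    # Cheap: headers are small and already parsed by the proxy.
    return {k.lower(): v for k, v in headers.items()}.get(ROUTE_HEADER)


def route_from_body(body: bytes) -> Optional[str]:
    # Expensive: the proxy must buffer and parse the full JSON-RPC request,
    # which may be large, streamed, or encrypted end to end.
    request = json.loads(body)
    params = request.get("params") or {}
    metadata = params.get("metadata") or {}
    return metadata.get("route")


def resolve_route(headers: Mapping[str, str], body: bytes) -> str:
    header_route = route_from_headers(headers)
    # Detecting drift forces the very body parse that header routing avoids.
    body_route = route_from_body(body)
    if header_route and body_route and header_route != body_route:
        raise ValueError(f"route mismatch: header={header_route!r} body={body_route!r}")
    route = header_route or body_route
    if route is None:
        raise ValueError("no route found")
    return route


if __name__ == "__main__":
    # Simplified JSON-RPC 2.0 request; the metadata layout is an assumption.
    body = json.dumps({
        "jsonrpc": "2.0",
        "id": 1,
        "method": "message/send",
        "params": {"metadata": {"route": "eu-west"}, "message": {"role": "user", "parts": []}},
    }).encode()
    print(resolve_route({"X-Agent-Route": "eu-west"}, body))
```

If the header is the single source of truth, `route_from_headers` is all a gateway needs. The mismatch check only exists because the metadata is duplicated.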

3) Extensions are an escape hatch, not interoperability

Extensions are useful. We rely on them.

Our Service Dependency Injection idea started as one:

👉 https://github.com/a2aproject/A2A/discussions/962

But extensions create dialects.

The moment two teams depend on different extensions, they are no longer speaking the same protocol.

They are speaking local variants based on assumptions and internal constraints.
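One way to see the dialect problem is to compare what an agent requires with what a client understands. The sketch below uses plain dictionaries loosely shaped like an agent card's extension declarations (a URI plus a `required` flag). The structure is simplified, and the URIs are made up for illustration, so check the current A2A specification for the real fields.

```python
def missing_extensions(agent_card: dict, client_supported: set[str]) -> list[str]:
    """Return URIs of extensions the agent requires but the client does not support."""
    declared = agent_card.get("capabilities", {}).get("extensions", [])
    return sorted(
        ext["uri"]
        for ext in declared
        if ext.get("required") and ext["uri"] not in client_supported
    )


if __name__ == "__main__":
    # Hypothetical agent card and extension URIs, for illustration only.
    agent_card = {
        "name": "billing-agent",
        "capabilities": {
            "extensions": [
                {"uri": "https://example.com/ext/dependency-injection/v1", "required": True},
                {"uri": "https://example.com/ext/cost-report/v1", "required": False},
            ]
        },
    }
    client = {"https://example.com/ext/cost-report/v1"}
    print(missing_extensions(agent_card, client))
```

A non-empty result means the two sides can only talk if one of them adopts the other's dialect. Optional extensions degrade gracefully, but required ones split the ecosystem.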

Extensions are fine early on.

They cannot replace protocol design.

Use them wisely.

4) A2A is low level and lacks strong opinions

To build anything non-trivial, you need to understand multi-turn task lifecycle, skills and content negotiation, event queues, and state transitions. The protocol exposes all of these, but many of the semantics around them are loosely defined.

This creates real ambiguity. For example:

In a multi-turn conversation between agents, is every turn a new Task, or is it one long-running Task with many events?

When exactly is a Task considered “completed”? After the final message? After the final artifact? Only when the executor decides so?

When should something be modeled as a Message vs an Artifact?

Can artifacts still be streamed after a task has already completed?
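One way to stop drift is to write down a single set of answers as code. The sketch below encodes one hypothetical house policy. The state names loosely follow A2A's task states, but the allowed transitions and the rule that artifacts are rejected once a task is terminal are choices this example makes, not requirements from the spec.

```python
from enum import Enum


class TaskState(str, Enum):
    SUBMITTED = "submitted"
    WORKING = "working"
    INPUT_REQUIRED = "input-required"
    COMPLETED = "completed"
    FAILED = "failed"
    CANCELED = "canceled"


TERMINAL = {TaskState.COMPLETED, TaskState.FAILED, TaskState.CANCELED}

# House policy (an assumption, not the spec): a multi-turn conversation is ONE task
# that moves between WORKING and INPUT_REQUIRED until the executor explicitly
# moves it to a terminal state. Terminal states have no outgoing transitions.
ALLOWED = {
    TaskState.SUBMITTED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
    TaskState.WORKING: {TaskState.INPUT_REQUIRED, TaskState.COMPLETED,
                        TaskState.FAILED, TaskState.CANCELED},
    TaskState.INPUT_REQUIRED: {TaskState.WORKING, TaskState.FAILED, TaskState.CANCELED},
}


class Task:
    def __init__(self) -> None:
        self.state = TaskState.SUBMITTED
        self.artifacts: list[str] = []

    def transition(self, new_state: TaskState) -> None:
        # Any transition not listed in ALLOWED (including any from a terminal state) fails.
        if new_state not in ALLOWED.get(self.state, set()):
            raise ValueError(f"illegal transition {self.state.value} -> {new_state.value}")
        self.state = new_state

    def add_artifact(self, content: str) -> None:
        # House policy: no artifacts, streamed or not, after the task is terminal.
        if self.state in TERMINAL:
            raise ValueError(f"task is {self.state.value}; artifacts are closed")
        self.artifacts.append(content)


if __name__ == "__main__":
    task = Task()
    task.transition(TaskState.WORKING)
    task.transition(TaskState.INPUT_REQUIRED)  # the agent asks a follow-up question
    task.transition(TaskState.WORKING)         # the next turn continues the same task
    task.add_artifact("draft report")
    task.transition(TaskState.COMPLETED)
    print(task.state.value, task.artifacts)
```

The specific answers matter less than having exactly one set of them that every team's agents enforce.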

Small differences in how teams interpret these do not break demos, but they compound as you scale, especially across teams or services.

The ecosystem would benefit from stronger default opinions.

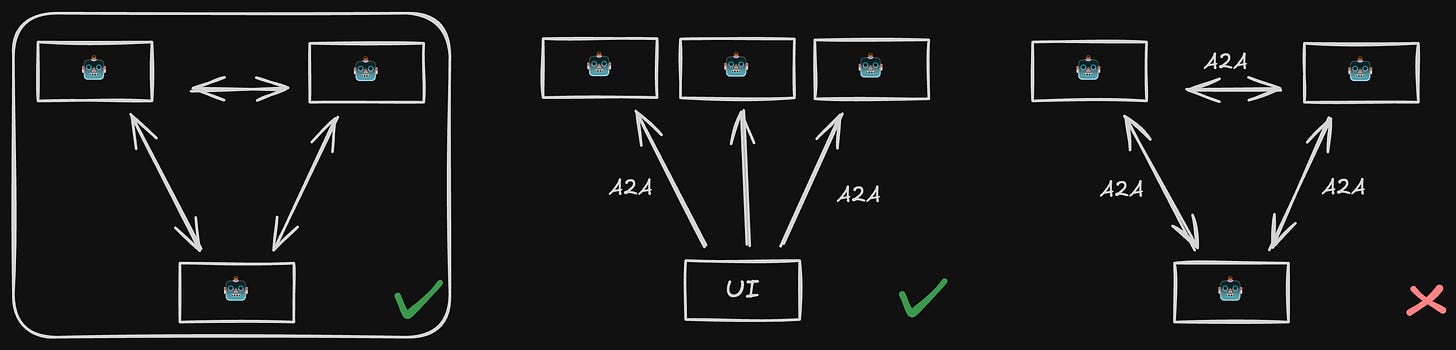

5) Most teams are not there yet

This is not a criticism. It is a reality.

Most teams still run:

one agent, or

a small cluster of agents inside a shared runtime, or

A2A as a way to standardize shared UI or build an internal agent catalog.

Very few deploy agents as a distributed system across teams or services.

As a result, A2A often gets adopted for simpler use cases.

These are not what A2A was designed for.

The danger is that A2A hardens around early, non-representative use cases.

As more distributed agent systems emerge, A2A will need to adjust.

And early adopters may need to revisit choices.

How platform teams should approach A2A

Be honest about what you will actually need in the next 1–2 years.

Choose one internal semantic model and stick to it.

Drift happens when every team interprets the spec differently.

Treat A2A as maturing infrastructure.

Plan for change. Expect some rewrites.

Build with loose coupling so you can switch out parts.

Use A2A where it helps, not where it complicates.

It shines in multi-agent orchestration and long-running tasks.

It might be heavy for simple use cases.

Accept that complexity comes from the work, not the spec.

Long-running tasks, shared timelines, partial results, and agent coordination are genuinely hard problems. Distributed systems problems.

Even with gaps, A2A is the strongest shared standard we have.

The important part is giving feedback when the protocol gets in your way and sharing what real systems expose.

That is how the spec improves.
